Robotic process automation (RPA) has become increasingly popular as banks and other financial institutions (FIs) look to generate efficiencies quickly. According to an early study, RPA-supported operations can cost as little as one-third of an offshore full-time employee (FTE) and one-fifth of an onshore FTE.1 RPA is a blanket term for software applications (“robots” or “BOTs”) that automate repetitive, non-value-adding tasks, such as data entry, document creation, audit/search log creation, and data transposition and processing, by mimicking the actions of a user at the user interface (UI) level. Gartner defines RPA as “a productivity tool that allows a user to configure one or more scripts (which some vendors refer to as “bots”) to activate specific keystrokes in an automated fashion…[to] mimic or emulate selected tasks (transaction steps) within an overall business or IT process. These may include manipulating data, passing data to and from different applications, triggering responses, or executing transactions.”2

RPA is an efficient and scalable solution for high-volume, manually performed anti-money laundering (AML) processes that are repetitive and driven by clear procedures, such as alert reviews, case log generation and subpoena responses. Because BOTs work through the user interface, they are largely application-independent, which makes them close to plug-and-play solutions for driving operational efficiency. While RPA undoubtedly delivers quick fixes, it must be deployed in a thoughtful and sustainable manner to keep operational risk at an acceptable level. In fraud or AML operations, where 90% to 95% of all work performed is eventually discarded as false positives, small input/output errors can lead to rising levels of false negatives, missed escalations, lost transactions and potentially significant rework.

This article will describe common use cases for automation and lessons learned from deploying RPA in the financial crimes risk management (FCRM) space.

FCRM Programs

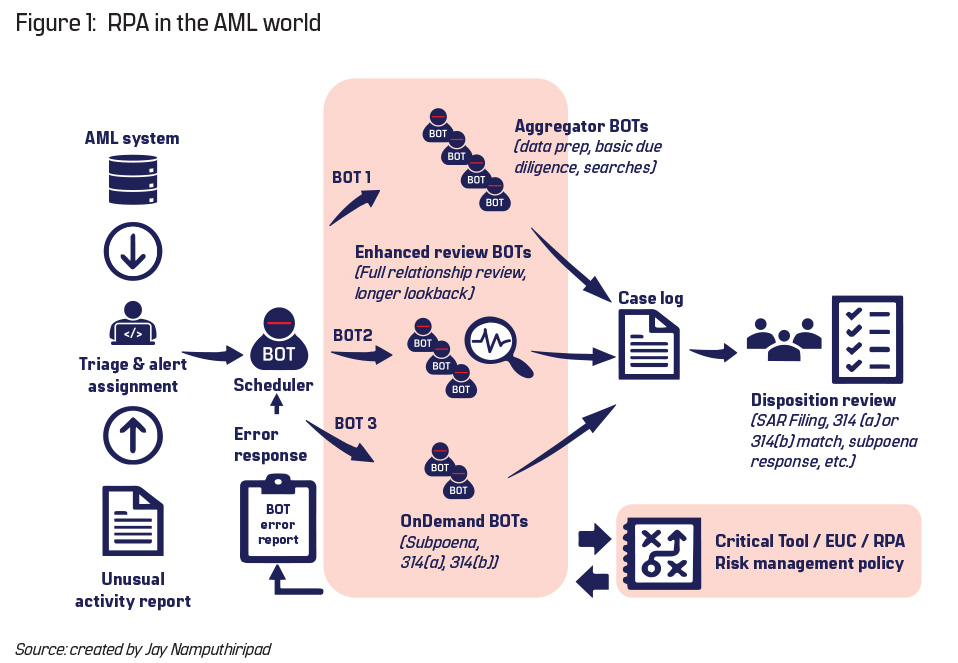

There are several ways to organize workflows, tools and teams in an FCRM program. One of the most commonly observed approaches is a multi-level process. Level 1 analysts review alerts from an AML system, aggregate data around each alert as needed, conduct initial research and then disposition the alert using a combination of transaction patterns, red flags (e.g., structuring, human trafficking) and external reference sources (e.g., LexisNexis, Refinitiv, Google). The results of this research are documented in a “case log” that is either integrated into the AML detection/workflow tool or kept as a standalone document. These analysts receive Bank Secrecy Act/anti-money laundering (BSA/AML) training sufficient for a baseline analysis of fraud trends and standard transaction patterns and red flags. Alerts that show nonstandard, emerging-risk or complex data patterns are escalated, together with the case log, to a more specialized Level 2 analyst. Creating this initial case log is a logical place for a starter BOT, one that aggregates data over a defined period across disparate AML tools into an easy-to-review format (aggregator BOT, as shown in Figure 1).
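The aggregator BOT described above can be sketched in Python. Production RPA BOTs usually collect this data by driving each tool's UI; the sketch below skips that layer and assumes, hypothetically, that each AML tool's records have already been pulled into lists of `Txn` objects. The names `Txn` and `build_case_log`, the 90-day default lookback and the sample data are all illustrative, not part of any vendor product.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Txn:
    txn_id: str
    customer_id: str
    posted: date
    amount: float
    source: str  # hypothetical: which AML tool/system the record came from


def build_case_log(customer_id, alert_date, sources, lookback_days=90):
    """Merge one customer's transactions from several tools into a single case log."""
    start = alert_date - timedelta(days=lookback_days)
    seen = {}
    for records in sources.values():
        for t in records:
            if t.customer_id == customer_id and start <= t.posted <= alert_date:
                # Keep the first copy when two tools report the same transaction ID.
                seen.setdefault(t.txn_id, t)
    rows = sorted(seen.values(), key=lambda t: (t.posted, t.txn_id))
    summary = {
        "customer_id": customer_id,
        "count": len(rows),
        "total": round(sum(t.amount for t in rows), 2),
        "window_start": start,
    }
    return rows, summary


# Hypothetical sample data from two tools, including a duplicate and an out-of-window record.
sources = {
    "wire_system": [Txn("W1", "C42", date(2023, 3, 1), 9500.0, "wire_system")],
    "core_banking": [
        Txn("D7", "C42", date(2023, 2, 15), 9800.0, "core_banking"),
        Txn("W1", "C42", date(2023, 3, 1), 9500.0, "core_banking"),  # duplicate of W1
        Txn("D9", "C42", date(2022, 10, 1), 500.0, "core_banking"),  # outside lookback
    ],
}
rows, summary = build_case_log("C42", date(2023, 3, 10), sources)
```

A Level 2 enhanced review could reuse the same merge step with a longer `lookback_days` (e.g., 365) to pull in the extended history. Deduplicating on transaction ID matters because the same transaction may appear in more than one tool.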


The Level 2 analyst performs more holistic, customer-centric research, including a full relationship review across all lines of business, and frequently extends the transaction review to a longer lookback period of three months to one year. Level 2 reviews build on the initial case log assembled by the Level 1 analyst. As part of this enhanced review, thousands of additional transactions may need to be integrated into the case log. This is another good opportunity to insert RPA into the workflow (enhanced review BOT, as shown in Figure 1).

This tiered process helps an FCRM unit build an efficient, risk-based operating model that frees analysts to spend more of their time on analysis and judgment rather than on data collection, aggregation or preparation. Over time, this helps upskill staff across the AML unit and improves outcomes all around.

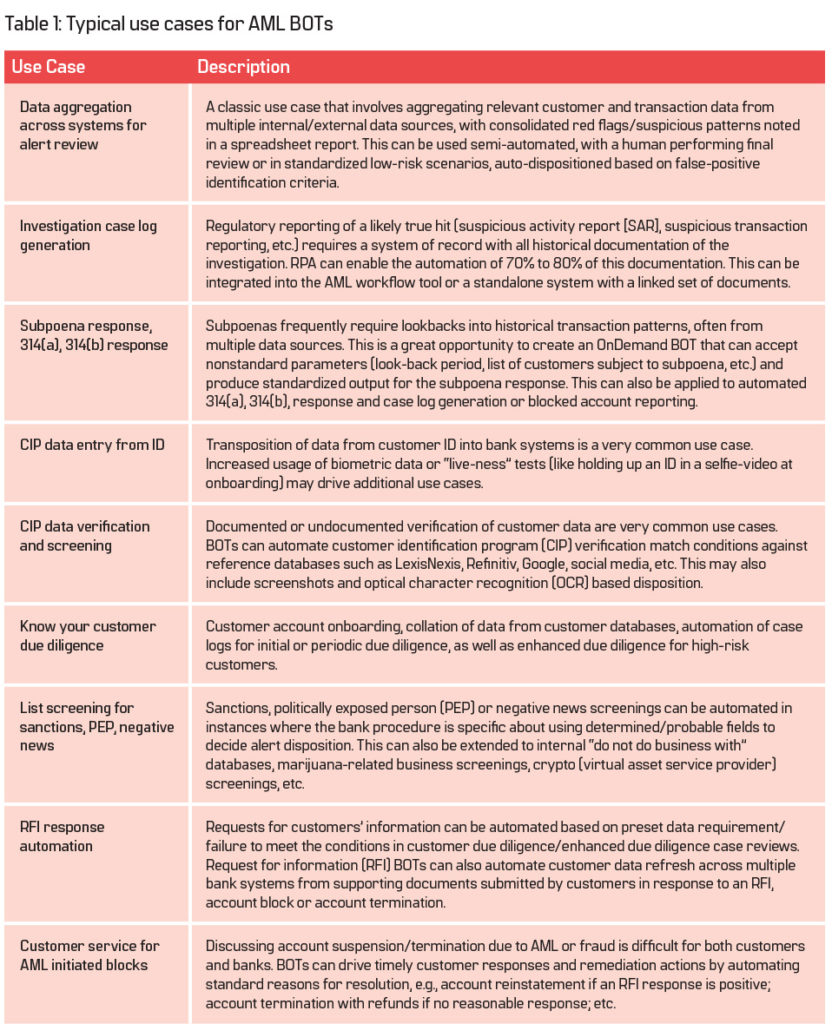

As an FI gains confidence and builds maturity in the RPA deployment process, many other use cases emerge. These can be automated individually or in logically related groups, depending on the FI’s specific operational challenges and risk appetite. Table 1 lists some commonly observed BSA/AML and fraud risk use cases suitable for RPA.

As the number of BOTs in production increases, an FI may need a scheduler/orchestrator BOT to trigger and manage BOT queues, including running unattended BOTs. Figure 1 depicts this orchestrator as one that can be programmed to invoke specific automated queues when certain demand/capacity or regulatory triggers are met during human triage. It also allows for building “OnDemand BOTs” that can be invoked with custom parameters while still following a standardized RPA process and producing standardized output. This is especially useful for organizations that receive many subpoenas, each with its own nonstandard requirements, or that are in the middle of regulatory lookbacks.
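One way to picture the scheduler/orchestrator BOT is as a priority queue of BOT jobs fed by human triage. The sketch below is a minimal, hypothetical design: the trigger names, their priority order and the `Orchestrator` class are assumptions for illustration, and commercial RPA platforms provide their own orchestration tooling. Keyword parameters passed to `submit` play the role of an OnDemand BOT's custom parameters, such as a subpoena's account and date range.

```python
import heapq
import itertools
from dataclasses import dataclass, field

# Hypothetical trigger types; a lower number runs first.
PRIORITY = {"regulatory": 0, "subpoena": 1, "capacity": 2}


@dataclass(order=True)
class Job:
    priority: int
    seq: int  # tie-breaker: first-in, first-out within the same priority
    bot_name: str = field(compare=False)
    params: dict = field(compare=False, default_factory=dict)


class Orchestrator:
    def __init__(self):
        self._bots = {}
        self._queue = []
        self._counter = itertools.count()

    def register(self, name, bot):
        """Register a callable that performs one BOT's work."""
        self._bots[name] = bot

    def submit(self, bot_name, trigger, **params):
        """Queue a job; params are passed to the BOT (the OnDemand case)."""
        if bot_name not in self._bots:
            raise KeyError(f"unknown BOT: {bot_name}")
        job = Job(PRIORITY[trigger], next(self._counter), bot_name, params)
        heapq.heappush(self._queue, job)

    def run(self, capacity):
        """Run up to `capacity` queued jobs, highest priority first."""
        results = []
        while self._queue and len(results) < capacity:
            job = heapq.heappop(self._queue)
            results.append((job.bot_name, self._bots[job.bot_name](**job.params)))
        return results

    def pending(self):
        return len(self._queue)


orch = Orchestrator()
orch.register("aggregator", lambda alert_id: f"case log for {alert_id}")
orch.register("subpoena", lambda account, start, end: f"{account}: {start}..{end}")
orch.submit("aggregator", "capacity", alert_id="A-1")
orch.submit("subpoena", "subpoena", account="123", start="2022-01-01", end="2022-12-31")
orch.submit("aggregator", "regulatory", alert_id="A-2")
done = orch.run(capacity=2)
```

Here the regulatory job runs first, then the subpoena job, and the capacity-driven job waits for the next run.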

RPA Development and Deployment Process

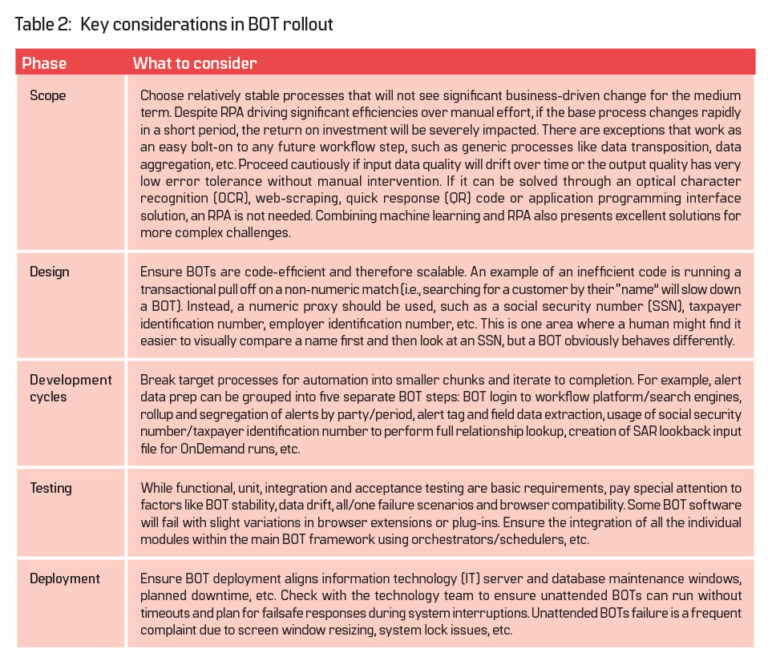

The RPA deployment process is similar to a standard software rollout. RPA essentially adds a smart layer on top of an existing system or process, avoiding the need for deep functional or technical analysis of the underlying legacy application. However, it is crucial to engage a process specialist or AML subject matter expert (SME) to document the current process in a way that translates easily into automation code. Agile deployment cycles of 10 to 12 weeks are common, and a typical deployment iterates through scope, design, development and testing phases. Table 2 outlines these phases along with key considerations for each phase of BOT deployment.

Key Lessons Learned

BOT accuracy—Most BOTs fail in their first few runs, and initial accuracy may be very low. Be cautious about promoting a BOT to production unless its accuracy in user acceptance testing (UAT) is close to 99%. This may fly in the face of agile philosophy, but production data almost always has nuances that testing environments do not adequately capture. Once in production, small errors can be magnified, so plan for adequate post-BOT manual capacity to fix initial run errors. In addition, input data quality will drift over time, which can produce output error rates of 1% to 5%. In either case, ensure that a robust post-BOT manual process is in place to detect and respond to errors, whether they come from iterative agile rollouts or from data drift. As with any change, continuously measure end-to-end process metrics, both before and after RPA, to course correct.
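The accuracy gate and the post-BOT capacity planning described above reduce to simple arithmetic. This sketch assumes UAT results are stored as a mapping from record ID to outcome and compares them with analyst-verified answers. The 99% threshold comes from the text; the daily volume, the 3% error rate (within the 1% to 5% range cited above) and the six minutes per fix are hypothetical inputs.

```python
def uat_accuracy(bot_outputs, expected):
    """Share of UAT records where the BOT output matches the analyst-verified answer."""
    if set(bot_outputs) != set(expected):
        raise ValueError("BOT outputs and expected results cover different record IDs")
    matches = sum(bot_outputs[k] == expected[k] for k in expected)
    return matches / len(expected)


def ready_for_production(accuracy, threshold=0.99):
    """Promotion gate: require UAT accuracy at or above the threshold."""
    return accuracy >= threshold


def manual_fix_capacity(daily_volume, error_rate, minutes_per_fix):
    """Rough analyst-hours per day needed to correct erroneous BOT outputs."""
    return daily_volume * error_rate * minutes_per_fix / 60


# Hypothetical UAT run: 200 records, 3 of which the BOT got wrong.
expected = {f"R{i}": "ok" for i in range(200)}
bot = dict(expected)
for rid in ("R5", "R17", "R42"):
    bot[rid] = "mismatch"

acc = uat_accuracy(bot, expected)
promote = ready_for_production(acc)
hours = manual_fix_capacity(daily_volume=5000, error_rate=0.03, minutes_per_fix=6)
```

With three mismatches in 200 UAT records, accuracy is 98.5%, so the BOT would not yet be promoted. At 5,000 records a day and a 3% error rate, roughly 15 analyst-hours a day of post-BOT correction capacity would be needed.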

Error and exception reporting—This is critical to the success of any RPA initiative. BOTs cannot detect or respond to obvious errors the way a human can. A periodic report that tracks issues and errors in a timely fashion and goes out to the appropriate automation, business unit and IT stakeholders is very helpful; set its frequency to match how often the BOT runs. Ensure that run-of-the-mill bugs, as well as broader “input or output data drift” issues, are tracked and resolved to a conclusion. Reviewing this report over a longer timeframe can yield significant insight for code improvement. Any delay or flaw in exception reporting can cause significant error magnification down the line. For example, a small volume of missing transactions can raise the likelihood of missed suspicious activity reports (SARs), requiring expensive long-period lookbacks.
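A periodic exception report can be as simple as filtering an error log to the reporting window, counting open issues by BOT and category, and routing each issue to the stakeholders who own it. In the sketch below, the `BotException` record, the category names and the `ROUTING` table are hypothetical; an FI would substitute its own taxonomy and distribution lists.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class BotException:
    bot: str
    run_date: date
    category: str  # hypothetical taxonomy: "bug", "input_drift", "output_drift"
    detail: str
    resolved: bool = False


# Hypothetical routing: which stakeholder groups receive each category.
ROUTING = {
    "bug": ["automation"],
    "input_drift": ["automation", "IT"],
    "output_drift": ["automation", "business_unit"],
}


def exception_report(exceptions, start, end):
    """Count open exceptions in [start, end] by (BOT, category) and group them by recipient."""
    open_items = [e for e in exceptions if start <= e.run_date <= end and not e.resolved]
    counts = Counter((e.bot, e.category) for e in open_items)
    by_recipient = defaultdict(list)
    for e in open_items:
        for group in ROUTING.get(e.category, ["automation"]):
            by_recipient[group].append(e)
    return counts, dict(by_recipient)


log = [
    BotException("aggregator", date(2023, 3, 1), "input_drift", "blank amount field"),
    BotException("aggregator", date(2023, 3, 2), "input_drift", "file truncated"),
    BotException("subpoena", date(2023, 3, 2), "bug", "date parse error", resolved=True),
    BotException("enhanced_review", date(2023, 3, 3), "output_drift", "missing rows"),
]
counts, routed = exception_report(log, date(2023, 3, 1), date(2023, 3, 7))
```

Keeping drift categories separate from ordinary bugs makes recurring input or output drift easier to spot when the report is reviewed over a longer timeframe.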

BOT maintenance—Input or output data drift (in format, frequency or completeness), caused by known or unknown changes to extract, transform and load (ETL) processes or to the source data, can cause BOT failure. Sometimes a BOT does not fail outright, but its output is incomplete or corrupt, and the problem is not detected until much later, in a third-party audit or by regulators. The long-term sustainability of any BOT is doubtful without a robust error reporting and remediation process. Simple data input errors (truncation, incomplete or missing files, or blank fields) can be magnified into significant rework; in the worst cases, a lookback of all transactions may be required to ensure no AML trends or patterns were missed.
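Many of the input failures listed above (missing files, truncation, blank fields, format changes) can be caught before a BOT runs. The sketch below validates a CSV extract against an expected column layout and a control record count. The column names, the required-field set and the assumption that the upstream ETL job supplies a control total are all hypothetical, for illustration only.

```python
import csv
import io

# Hypothetical extract layout and mandatory fields.
EXPECTED_COLUMNS = ["txn_id", "customer_id", "posted", "amount"]
REQUIRED = {"txn_id", "customer_id", "amount"}


def validate_extract(text, expected_rows):
    """Return a list of problems found in a CSV extract before the BOT consumes it."""
    if not text or not text.strip():
        return ["missing or empty file"]
    reader = csv.DictReader(io.StringIO(text))
    if reader.fieldnames != EXPECTED_COLUMNS:
        # Format drift: stop here, since field-level checks would be meaningless.
        return [f"format drift: columns {reader.fieldnames}"]
    rows = list(reader)
    problems = []
    if len(rows) != expected_rows:
        problems.append(
            f"row count {len(rows)} != control total {expected_rows} (possible truncation)"
        )
    for i, row in enumerate(rows, start=1):
        # Short rows yield None for missing fields, so treat None like an empty string.
        blanks = sorted(c for c in REQUIRED if not (row.get(c) or "").strip())
        if blanks:
            problems.append(f"row {i}: blank {', '.join(blanks)}")
    return problems


good = "txn_id,customer_id,posted,amount\nT1,C42,2023-03-01,9500\nT2,C42,2023-03-02,9800\n"
bad = "txn_id,customer_id,posted,amount\nT1,C42,2023-03-01,\n"
drift = "txn_id,cust_id,posted,amount\nT1,C42,2023-03-01,9500\n"
```

Any non-empty result would be written to the exception report and hold the BOT run, rather than letting a silent input error propagate into the case logs.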

BOT control documentation—BOTs can be considered a special type of end-user computing (EUC) tool, capable of operating on the frontend or backend in an attended or unattended fashion. As with any EUC tool, one of a regulator's fundamental considerations is robust documentation covering the RPA/EUC policy, the associated risk assessment and a current risk and control inventory. Because BOTs can magnify errors a thousand-fold, strong preventive and detective controls are very reassuring to regulators.

Conclusion

RPA is an effective and quick way to deliver process efficiencies in the FCRM space. Any BOT deployment should be paired with adequate staff retraining on new workflow steps as part of proactive change management outreach. Agile, iterative approaches work well, especially when layering in complementary technologies such as machine learning or artificial intelligence. As with any big change, keep a close watch on people, process and technology metrics to ensure success.

Jay Namputhiripad, CAMS, Global head, Financial Crimes Risk Management, The Bancorp, jayanamp@yahoo.com

- “Robotic Process Automation—Robots conquer business processes in back offices,” Capgemini Consulting and Capgemini Business Services, 2016, https://www.capgemini.com/consulting-fr/wp-content/uploads/sites/31/2017/08/robotic_study_capgemini_consulting.pdf

- “Robotic Process Automation,” Gartner, https://www.gartner.com/en/information-technology/glossary/robotic-process-automation-rpa