Anti-money laundering (AML) models historically relied on qualitative, expert judgment components. Today, these models still rely on expert judgment but are coupled with more sophisticated scoring algorithms and have other quantitatively derived components, such as segments and thresholds. The increased sophistication of modeling techniques and the broader application of models have undoubtedly played key roles in the rapid growth of the AML industry. As the quantitative rigor increases behind these AML models, regulatory bodies are involving analytical and statistical specialists in their AML examinations and reviews. AML models must follow the guidance of OCC Bulletin 2011-12, which describes elements of a sound program to effectively manage model risk, namely the need for independent review and model validations. At the same time, the widespread adoption of these AML scenarios and typologies being considered as models has introduced new risk management challenges. Very simply, how do we know that our AML models are working as intended?

Across the industry, we hold an appreciation for the potential improvements that could result from greater collaboration among industry leaders, academic researchers and regulators. Given the reputational implications associated with the accuracy and effectiveness of AML models, issues concerning model validation are of obvious concern to the industry. Erroneous or misspecified models may lead to expensive look-backs or other regulatory fines. Topics discussed herein aim to provide clarifications and specific suggestions for improving the AML model validation process.

Define the Model Environment

When approaching AML model risk management, the first activity is to define the population of AML scenarios, typologies and methodologies used in a financial institutions' (FIs) transaction monitoring environment. Having a complete inventory of all scenarios, typologies and methodologies within the AML environment, the model risk practitioner will then evaluate each scenario against the definition of a model. Models are defined by the OCC Bulletin 2011-12 as a quantitative method, system or approach that applies statistical, economic, financial or mathematical theories, techniques and assumptions to process input data into quantitative estimates. In the AML world, this definition is applied to transaction monitoring models:

- using more than string (if/then) statements; and

- using quantitative rigor and methodologies to optimize thresholds, scoring events, segmentations and conducting above/below-the-line analysis.

AML models include transaction monitoring software vendor products, large homegrown transaction monitoring systems, customer risk rating models (if with a quantitative scoring component) and alert risk scoring models (again, if with a quantitative scoring component). A transaction monitoring product, in its most basic form, is not a model. However, when in production and coupled with optimization methodologies and rigorous segmentation delineations, this added quantitative rigor is what classifies the entirety of the product as a model.

Furthermore, individual methodologies are eligible, and generally considered, models due to their quantitative nature. Example methodologies include those used to define the process of optimizing thresholds, scoring events, producing segmentations and conducting above/below-the-line analysis.

Model Ranking and Model Controls

Once the models have been determined, the risk practitioner should then use a ranking/tiering process to categorize the models into levels of risk, so as to establish appropriate model controls in correlation to the risk each model represents. Most FIs have a model governance group that ranks the risk of models across their FI using some variant of low/medium/high type risk. Since OCC 2011-12 was published, AML models have been ranked in our industry inconsistently and validating AML models was a foreign concept.

Like many unknowns, the precedence quickly became to treat AML scenarios/typologies as models and to treat them all at the highest level of risk. Where the reputational risk is high, the quantitative rigor and financial impact associated with AML models is low. Now that many practitioners, statisticians, regulators and financial quants have looked "under the hood" of these AML models, we find AML models do not warrant a high-risk classification. Classifying AML models as either low risk or medium risk is more consistent with the mathematical/statistical rigor inside AML modeling.

Once models are tiered, appropriate model controls can be established to the level of risk each model presents. The higher the risk, the tighter the model controls will be and the optimization and validation will be more rigorous and frequent. The lower the risk, fundamental model controls will need to be established and optimization and validation will be less frequent than higher tiers. Consequently, the frequency of optimization and validation is discussed below in correlation to the established tier/rank of a model.

There are a number of compelling reasons AML programs should pay attention to model validation, which is essentially an activity conducted to monitor a model's performance. Size and scale considerations are driving factors that increase the importance of carefully monitoring a model's performance. Age is another factor. Many AML scenarios/typologies were constructed five to 10 years ago without revision. Models should be optimized/tuned every 12 to 18 months. An independent validation, by a team other than the analyst that optimized the model, should be performed at the same frequency. Using the risk ranking process from above, higher ranked models would be optimized/validated closer to the 12-month cycle; whereas, lower risk models would be optimized/validated closer to the 18-month cycle. Some key typologies may not be classified as models, because of a FIs particular model definition. However, these non-models should also go through an optimization and validation process, but at a slower frequency of every 24 to 36 months.

Model Validation

Having now defined the model environment, the associated model risk tier, and the frequency of optimization/validation activity, AML practitioners may begin the model validation process. As model risk becomes a bigger factor in the overall risk consideration of FIs, model validation becomes paramount. Model risk management is a process wherein AML practitioners must 1) be able to demonstrate to senior management and regulators how their models are performing against expectations and 2) know how risk exposures fit within defined bands of acceptability.

Unlike traditional market and credit risk modeling, AML models do not produce a definitive outcome that can be back-tested against a prior dataset. AML models aim to identify unusual activity that, upon investigation, may be suspicious. AML models are not intended to capture proven suspicious activity. The true productivity of an AML model is unknown, as even the best data scientist cannot prove whether 100 percent of all money laundering activity was detected. AML model's "productivity" definition may vary model-to-model; because true productivity is unknown, it is important to associate productivity with the model's intended use, as discussed below.

Validation processes, and related documentation and reporting, will need to be consistent and clearly tied to a model's purpose. AML executives need to consider:

- How do we integrate model purpose and performance expectations into validation processes?

- How do we establish relevant tolerance metrics in validations?

- What happens when we determine our models are not working as intended?

- What are appropriate monitoring standards?

- How can we recognize and document the role of judgment in validation processes?

Many of these questions have technical components that are generally addressed with detailed statistical considerations. Recognizing there is not a common yardstick by which AML modeling can be measured, AML practitioners can utilize a framework for thinking about how model purpose, model use and expectations for results play into the evaluation of AML models.

Framework for Evaluating AML Models

It is important to articulate/document a model's purpose, use and expected results. Documentation should include key input/output decisions made during a model's development and/or implementation. Documenting the expected outcome and expected results of a model is also a critical component, however, more challenging to define. Expected results can be captured using standard statistical tests, such as the Gini coefficient and the K-S statistics. FIs seem to preoccupy themselves in selecting the best sampling methodology and/or statistical performance analysis method. Developing effective processes and exercising sound judgment are equally as important as the particular statistical measurement technique used.

Validation methodologies should be closely associated with how the model is used. For example, in cases where a FI uses a cluster model, validation criteria should include evaluations of the model's ability to attain high intra-cluster similarity and low inter-cluster similarity. However, if a FI only uses the rank-ordering properties of the score, validation should concentrate on the model's ability to separate risk over time. These metrics and measurements are not applicable to all scenarios across all FIs. The more general point is that when evaluating a model's performance, one must depend critically on a clear understanding of the model's intended use.

In development, modelers should establish a clear yardstick for a model's purpose. Models must be validated relative to clearly understood expectations. Rather than establishing some arbitrary statistical criterion for a model's performance, the central question for validation is whether the model is working as intended, robust and stable over time, and producing results that are at least as good as alternative approaches. A clear understanding and documentation of expected performance is a necessary and fundamental basis on which all validation approaches must be built.

While there is general agreement that the validation process is part science and part art, there is a need to establish clear quantitative criterion as part of the validation process. Such criteria need not be the sole measure of model performance, but it is necessary for the establishment of scientific rigor and discipline in the validation process. Classification and clustering models are discussed below with associated, recommended validation tests.

Some transaction-monitoring scenarios can be categorized as classification models. Classification models should be evaluated simply based on how well they separate "good" and "bad" account behavior over time. One common approach is to consider some measure of divergence between "goods" and "bads."

An effective classification tool should result in accepting a high proportion of "goods" consistent with expectations. Common measures of a classification model's ability to separate risk include the K-S statistic, the ROC curve, confusion matrix and lift statistics. If your model has a scoring component, a related consideration is to evaluate the extent the predictions of the scoring model are better than randomly-generated predictions over time. In a perfect case, 100 percent of true positive responses being accurately predicted in the top quartiles. Instability in ordering would suggest that the model is not capturing the underlying and relatively constant information about how risky different accounts are.

Other transaction-monitoring scenarios utilize clustering analysis. Similar to classification, clustering is used to segment the data with the difference being segments are not predefined. In other words, clustering defines segments based on the data and classification partitions data into preset groups. Popular methods of clustering analysis include Ward's minimum variance method, McQuitty's method and Geometri centroid. Both external and internal validity measures have been utilized to evaluate the clustering structures. External validity measures how close is a cluster to a reference while internal validity measures intrinsic characteristics of a clustering from both absolute and relative perspectives. Whether a model practitioner uses the McQuitty method or a ROC curve, the emphasis here is to evaluate a model's performance using a statistical method aligned specifically to the model's intended use.

Clear quantitative criterion has been suggested above for a validation process; however, one quickly realizes the role expert judgment, usually by compliance personnel, has as part of the development and ongoing monitoring of AML models. At the most basic level, the construction of any statistical transaction monitoring model/typology/scenario requires some element of judgment. Judgment is absolutely permissible in AML modeling, but needs to be implemented carefully. Model risk practitioners should look for consistency. Consistency is a critical factor, and judgmental input must be controlled and managed with the same precision used with other model inputs. For example, when judgmental inputs are inconsistent and subject to frequent changes, the model becomes superfluous and should be either abandoned or revised. The perceived need for constant change and recalibration is likely a sign that the model is no longer functioning as intended and needs to be replaced.

FIs should focus on documentation, a point often echoed by the regulatory community

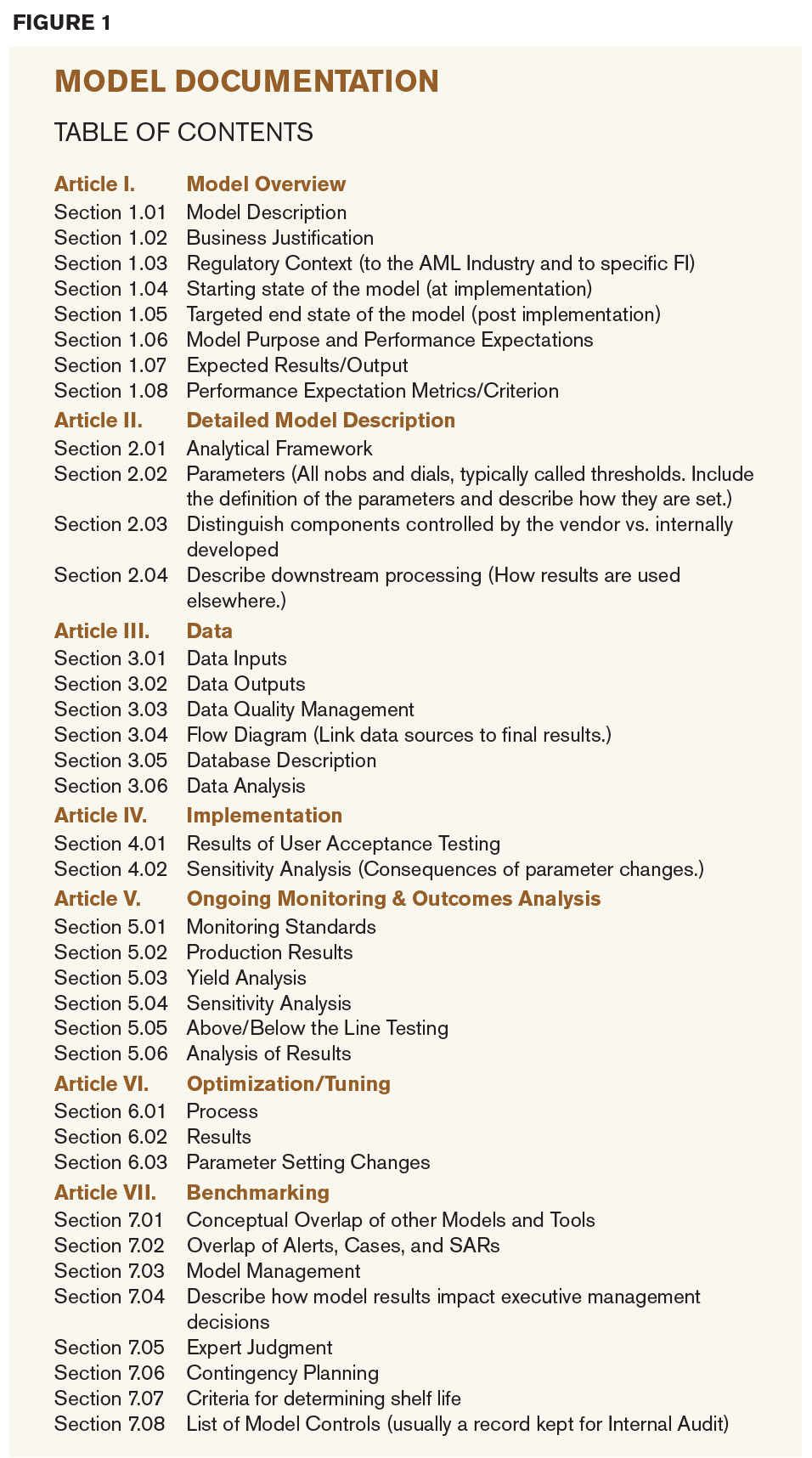

FIs should focus on documentation, a point often echoed by the regulatory community. Figure 1 displays a suggested table of contents for a model ranked/tiered as high risk.

A recommended section from the last portion of the table of contents, Model Management, is often missing from model documentation. The lack of documentation of judgmental processes is an all too common deficiency found in FI exams. External regulators, internal auditors and internal model governance groups all have a need to understand how judgment is being employed and how well outcomes matched expectations or previous performance. While model developers should have clearly established expectations of how a model will perform and how a model should inform management decisions, they should also have criteria that elicit managerial review to determine whether a model has come to the end of its useful life.

To conclude, validation, and more generally risk management, is an entire process that requires interplay between effective managerial judgment and statistical expertise. It is not simply establishing a set of statistical benchmarks. Bank regulators and policymakers recognize the potential for undue risk that can arise from model misapplication or misspecification. Examining and testing model validation processes are becoming central components in supervisory examinations of banks' AML practices. Documentation is a critical component of the entire process. A model's development, implementation, and use (including expert judgment where applicable) should all be detailed and retained in a model document repository. A validation process and testing should also be documented and retained. The above was intended to establish introductory, fundamental industry best practices of AML model risk management; future research can address targeted subjects more thoroughly, such as the establishment of model controls, validation procedures, optimization and tuning methodologies, risk scoring, segmentation methodologies and above/below-the-line analysis programs.